Problem Statement

The aim of this project was to develop an easy-to-use model that recognizes hand gestures in real time. A further goal is to let the user alter the codebase and retrain the model to recognize custom gestures.

Background Research and Design Decisions

To determine the best approach for this project, various machine learning models and architectures were researched. The first step was to decide what type of input data would be used. Since the goal was to recognize hand gestures, image data was the natural starting point. However, for the user to be able to create new gestures and retrain the model, data collection had to be simple. Therefore, rather than using raw images as input, it was decided to use hand landmark coordinates. This lets the user easily record themselves performing the gestures they want to recognize. To achieve this, OpenCV and MediaPipe Hands were used to detect hand landmarks in real time. This provides a set of 21 3D coordinates for each hand detected in the image, and these coordinates are then used as input to the machine learning model.

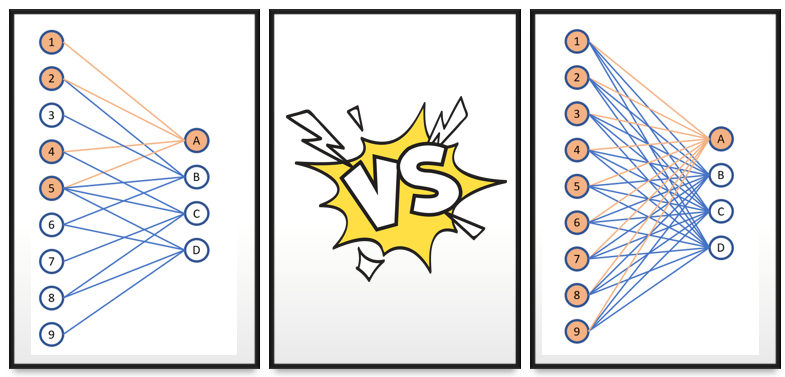
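As a minimal sketch of how the 21 landmarks can become model input, the snippet below flattens them into a single list of 63 values. The `Landmark` tuple is a stand-in for MediaPipe's landmark objects, which expose `x`, `y` and `z` attributes. The function name `flatten_landmarks` is hypothetical and is not taken from the project code.

```python
from collections import namedtuple

# Stand-in for a MediaPipe landmark, which exposes x, y and z attributes.
Landmark = namedtuple("Landmark", ["x", "y", "z"])

NUM_LANDMARKS = 21  # landmarks per detected hand


def flatten_landmarks(landmarks):
    """Turn 21 (x, y, z) landmarks into one flat list of 63 floats.

    Hypothetical helper: the ordering x0, y0, z0, x1, y1, z1, ... is an
    assumption, not necessarily the layout used by the project.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    features = []
    for point in landmarks:
        features.extend([point.x, point.y, point.z])
    return features
```

Any object with `x`, `y` and `z` attributes works here, so the same function could in principle be applied to the landmark list of a detected hand.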

With the input data determined, the next step was to decide on a model architecture. The first model researched was the Convolutional Neural Network (CNN). This type of model is commonly used for image classification, which at first seemed fitting for this project. However, a CNN is built to exploit spatial relationships between neighboring pixels, and the data is no longer in image form, so a CNN would not be the best choice. In this dataset, each input feature (landmark coordinate) may be related to every other input feature, not only to its neighbors. To achieve better performance, a Fully Connected Neural Network (FCNN) was chosen instead. In this type of model, every input feature is connected to every neuron in the next layer, which allows more complex relationships between features to be learned. Furthermore, with 21 landmarks each having 3 coordinates (x, y, z), the input layer has only 63 nodes. This keeps the FCNN lightweight and efficient, making it suitable for real-time applications, whereas a CNN would add unnecessary complexity without making use of its strengths.

How weights are distributed in a CNN vs. an FCNN
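To give a rough sense of how small such a network can be, the sketch below counts the parameters of a fully connected network with 63 inputs and runs one forward pass in plain Python. Only the input size of 63 comes from the text above. The hidden layer sizes, the 5 output classes, the ReLU and softmax activations, and all function names are assumptions for illustration. A real implementation would more likely use a deep learning framework.

```python
import math
import random

# Hypothetical layer sizes: 63 inputs (21 landmarks x 3 coordinates),
# two hidden layers, and 5 gesture classes. Only the 63 comes from the text.
LAYER_SIZES = [63, 64, 32, 5]


def count_parameters(layer_sizes):
    """Count the weights and biases of a fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def init_network(layer_sizes, seed=0):
    """Create one (weights, biases) pair per dense layer with random weights."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, features):
    """Run one forward pass: ReLU on hidden layers, softmax on the output."""
    activations = list(features)
    for index, (weights, biases) in enumerate(layers):
        # Every neuron sees every value from the previous layer.
        outputs = [sum(w * a for w, a in zip(row, activations)) + b
                   for row, b in zip(weights, biases)]
        is_last = index == len(layers) - 1
        activations = outputs if is_last else [max(0.0, v) for v in outputs]
    # Softmax turns the final scores into class probabilities.
    peak = max(activations)
    exps = [math.exp(v - peak) for v in activations]
    total = sum(exps)
    return [e / total for e in exps]
```

With these assumed sizes, `count_parameters(LAYER_SIZES)` gives 6341 trainable values in total, which is tiny compared with typical image models and is consistent with the real-time goal.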

Data Collection

Using OpenCV and MediaPipe Hands, CreateGestureData.py was created to collect hand landmark data and save it to a CSV file. It uses the webcam to capture video frames in real time. The user presses a button to indicate which gesture they are performing, and the corresponding hand landmark data is saved to the CSV file. The user can perform multiple gestures and collect as much data as they want. In general, the more varied data collected for each gesture, the better the model will perform. Once the user is satisfied with the amount of data collected, they can stop the program and use the CSV file to train the model.
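The saving step described above could look roughly like the following sketch. It is not the actual contents of CreateGestureData.py. The row layout (label first, then 63 coordinates), the use of digit keys as gesture labels, and the names `key_to_label` and `append_sample` are all assumptions made for illustration.

```python
import csv

NUM_FEATURES = 63  # 21 landmarks x 3 coordinates


def key_to_label(key_code):
    """Map the digit keys '0'-'9' to gesture labels 0-9, else return None.

    Assumes key codes like those returned by a keyboard polling call,
    where the low byte holds the ASCII value of the pressed key.
    """
    code = key_code & 0xFF
    if ord("0") <= code <= ord("9"):
        return code - ord("0")
    return None


def append_sample(csv_path, label, features):
    """Append one labeled sample as a row: label, then 63 coordinates."""
    if len(features) != NUM_FEATURES:
        raise ValueError(f"expected {NUM_FEATURES} features, got {len(features)}")
    # Mode "a" keeps earlier samples, so data accumulates across sessions.
    with open(csv_path, "a", newline="") as handle:
        csv.writer(handle).writerow([label, *features])
```

Appending rather than overwriting means a user can add samples for a new gesture later without recollecting the existing ones.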

Future Steps

- Integration with Raspberry Pi projects using the Pi camera